The Red Canoe - A Real-World Photo and Gen AI Project

Earlier this year, I completed a successful proof of concept using Adobe Firefly to realistically expand a landscape photo. The original image was captured in August 2008; the expanded version was reimagined in June 2023, nearly 15 YEARS LATER.

Looking back, the original photo seemed so claustrophobic… so contained and limited. I wanted to expand the image as if I had used a wider-angle lens or backed away from the canoe, two things I may or may not have been able to do at the time of capture.

Generative Fill expanded the field of view, making the world of that photograph so much larger. This technology has also made my own world that much larger, just as Photoshop did when I first learned to use it for digital imaging, then Fireworks for web and UX design, and later still Substance 3D. Each of these tools opened new possibilities for me: new potential to create and to earn a living. I’m so excited to see where Firefly will take me.

But I'm getting ahead of myself. Let's talk about the project, shall we?

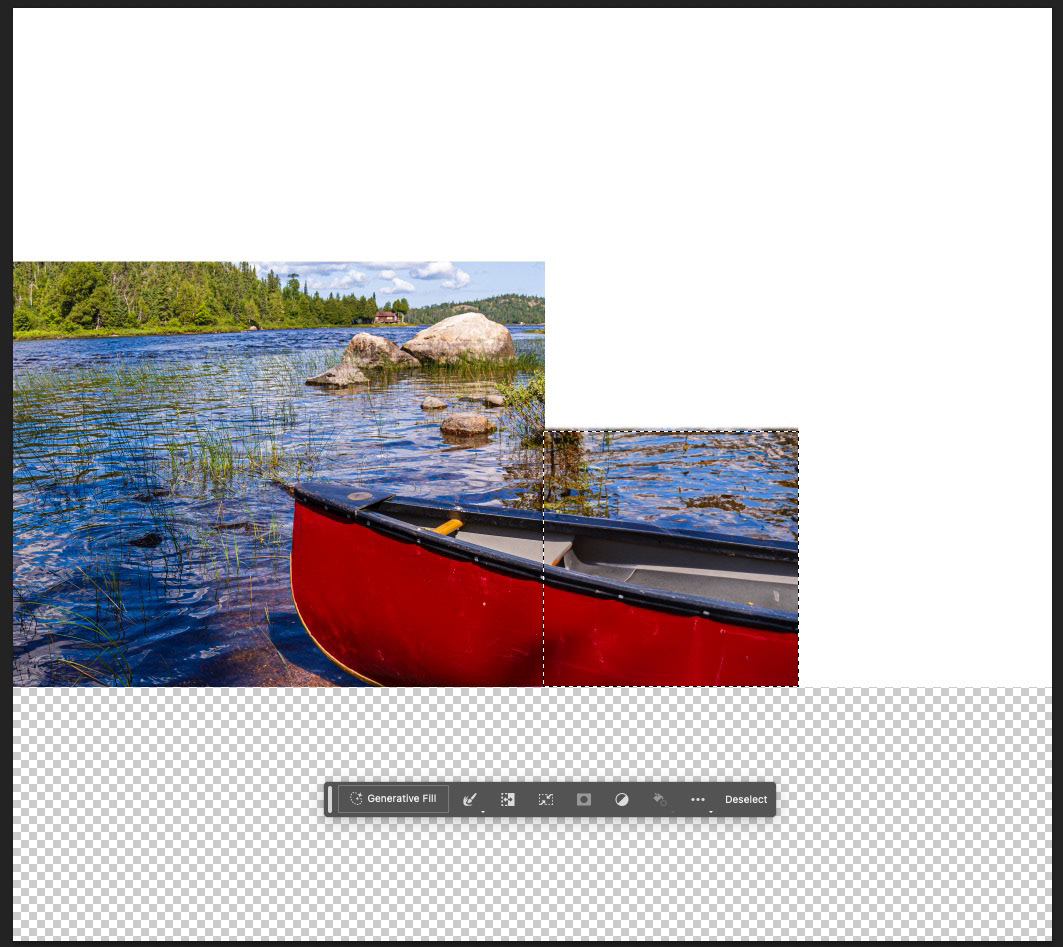

I began this project as one of several tests of Adobe Firefly's viability in my post-production photography workflow. During testing, I became aware of a resolution limitation when outpainting with Firefly, as well as a workaround. Firefly could generate new content at a maximum of 1024 pixels on the longest edge; if your selection was larger than that, Firefly would essentially upscale the generated pixels to fill the larger area, producing a soft, blurry rendition. That may be fine for a social media post, but not for images intended for printing or viewing at large sizes.
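To put a rough number on that softness, here is a simplified model in Python. It assumes, as described above, that generated content tops out at 1024 pixels on its longest edge and is then enlarged proportionally to fill the selection. It only illustrates the arithmetic; it is not a description of how Firefly works internally, and the function name is hypothetical.

```python
MAX_GENERATED_EDGE = 1024  # generation limit described above, in pixels


def enlargement_factor(sel_w: int, sel_h: int,
                       max_edge: int = MAX_GENERATED_EDGE) -> float:
    """Linear factor by which generated content is stretched to fill a selection.

    1.0 means the selection is within the limit (no stretching); 2.0 means
    each generated pixel is spread over roughly a 2x2 block of output pixels.
    """
    return max(1.0, max(sel_w, sel_h) / max_edge)


print(enlargement_factor(1024, 768))   # 1.0 (within the limit)
print(enlargement_factor(3000, 1200))  # 2.9296875 (a wide strip along one edge)
```

Under this model, a 3000-pixel-wide selection stretches generated detail nearly three times, which is why one large selection looks soft in print while a 1024x1024 selection needs no stretching at all.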

The Workaround

First, I used the Crop tool to extend the canvas to the desired dimensions. The Crop tool now includes a Generative Expand option, but the same resolution limitation applies.

So, instead of using Generative Expand, I maintained higher quality by working in multiple, slightly overlapping 1024x1024-pixel selections, applying Generative Fill (powered by Adobe Firefly) to each selection before moving on to the next. In fairly short order, I had an entirely believable scene, easily 40% larger than the original, containing photorealistic imagery that did not exist in the original photo.
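The tiling arithmetic behind this workaround can be sketched in a few lines of Python. This is a planning aid under stated assumptions, not an Adobe tool: it assumes the 1024-pixel limit described above, a hypothetical 128-pixel minimum overlap between neighbouring selections, and hypothetical canvas and original-image dimensions. It only lists selection rectangles (x, y, width, height) to fill by hand; it does not call Photoshop or Firefly, and the function names are hypothetical.

```python
import math


def axis_offsets(length: int, tile: int = 1024, overlap: int = 128) -> list[int]:
    """Start offsets along one axis so that tiles of size `tile` cover [0, length)
    with neighbouring tiles overlapping by at least `overlap` pixels."""
    if not 0 <= overlap < tile:
        raise ValueError("overlap must be non-negative and smaller than the tile")
    if length <= tile:
        return [0]  # a single selection, clipped to `length`, covers this axis
    step = tile - overlap
    count = math.ceil((length - tile) / step) + 1
    offsets = [i * step for i in range(count - 1)]
    offsets.append(length - tile)  # last tile sits flush with the far edge
    return offsets


def fill_tiles(canvas_w: int, canvas_h: int,
               orig_x: int, orig_y: int, orig_w: int, orig_h: int,
               tile: int = 1024, overlap: int = 128) -> list[tuple[int, int, int, int]]:
    """Selections (x, y, w, h) that touch the newly added canvas area.

    Tiles lying entirely inside the original photo need no Generative Fill,
    so they are skipped.
    """
    selections = []
    for y in axis_offsets(canvas_h, tile, overlap):
        for x in axis_offsets(canvas_w, tile, overlap):
            w = min(tile, canvas_w - x)
            h = min(tile, canvas_h - y)
            inside_original = (
                x >= orig_x and y >= orig_y
                and x + w <= orig_x + orig_w
                and y + h <= orig_y + orig_h
            )
            if not inside_original:
                selections.append((x, y, w, h))
    return selections


# Hypothetical example: a 3000x2000 original centered on a 3600x2400 canvas.
for sel in fill_tiles(3600, 2400, 300, 200, 3000, 2000):
    print(sel)
```

With these hypothetical dimensions the sketch plans ten selections; the two grid cells that fall entirely inside the original are skipped. The last selection in each row and column is pushed flush against the canvas edge, so it overlaps its neighbour by more than the minimum instead of running past the edge.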

This work is a bit tedious, I admit, but the end result was worth it.

Tip: If the drudgery is too much for you, Unmesh Dinda from Piximperfect has released a free plugin that automates the selection and Generative Fill process. Learn more at this link.

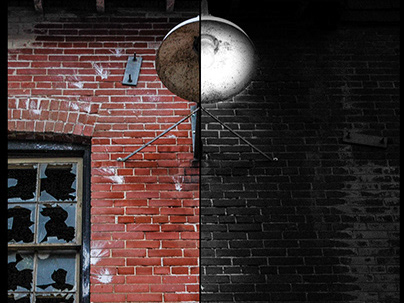

Related Tip: Blake Rudis from f64 Academy has released a great YouTube video on how to blend a large Gen Fill selection more effectively with the original image.

From there, I created a flattened version of the scene and used a combination of Generative Fill and traditional tools like the Clone Stamp to clean up problem areas, such as pure-white clouds and the rivets on the canoe's gunnels.

The original capture from more than 10 years ago is outlined in white and did NOT include the yellow canoe. Generative Fill in Photoshop allowed me to realistically expand the image beyond its original frame. While this image is not part of my exhibition, I have printed it at 8x10 and the results are impressive, although the amount of generated content puts this image more in the category of photo-illustration than true photograph.

The final step was completed a few weeks later when I picked up an 8x10 print of the new file from a local commercial photo lab. The lab tech was blown away when I shared that at least 40% of this image was AI-generated!

I have since discovered other helpful, if less dramatic, uses for Generative AI in my post-production workflow, usually removing distracting elements from my photos, but I continue to push the limits.

This image, along with others that employ Generative Fill to a lesser extent, will become part of one of my upcoming photo exhibitions. This, to me, is the power of Generative AI; it helps me build on my vision, not replace what I do or make my creative eye redundant.

Learn More

To learn more about my testing and workflow, along with other helpful resources, check out these links: