Living with the (unwanted?) Creative Assistant

This is article #16 in my series on Practical Generative AI for Photographers. You can find more related content in my Generative AI for Photographers section on my Behance page.

Fair warning: this is going to be far more of an essay than a photo essay.

Let’s make one thing clear right off the top: image manipulation began when the first photograph was captured, in the mid-1820s.

Even some 200 years ago, photographers were manipulating reality through their choice of lens, camera position and framing and, ultimately, through the processing of the captured image. The general workflow of a photographer has not changed in two centuries.

Between 1826 and 1827, Nicéphore Niépce recorded the world’s oldest surviving camera photograph, a view from a window at Le Gras.

I won’t belabor the history of photographic technology in this article, but suffice it to say that, despite any efforts to the contrary, photographs have always been, and always will be, artificial representations of reality. This is neither good nor bad; it’s just a fact. If you can agree with this statement, read on.

Where good or bad enters the discussion is in human intent.

Photojournalists strive to create an objective image, doing their best to record what is in front of them with little or no embellishment beyond what is needed to make the image easier to comprehend.

Pierre Trudeau, near the end of his career, looks on as Elizabeth II signs the Constitution. (Image credit: Ron Poling/The Canadian Press.)

Photographic artists can let the pendulum swing in either direction, depending on the goals of their art.

Andy Warhol at the Jewish Museum (by Bernard Gotfryd): Flickr/Library of Congress

Landscape photographers (like me, for example) can plan and wait for just the right time and the right light to capture the most dramatic image of a specific location, but we often work with the technology of the day to produce what we “saw” or felt in our mind's eye. This could be through the choice of film, chemical processing, physical printing or the use of software.

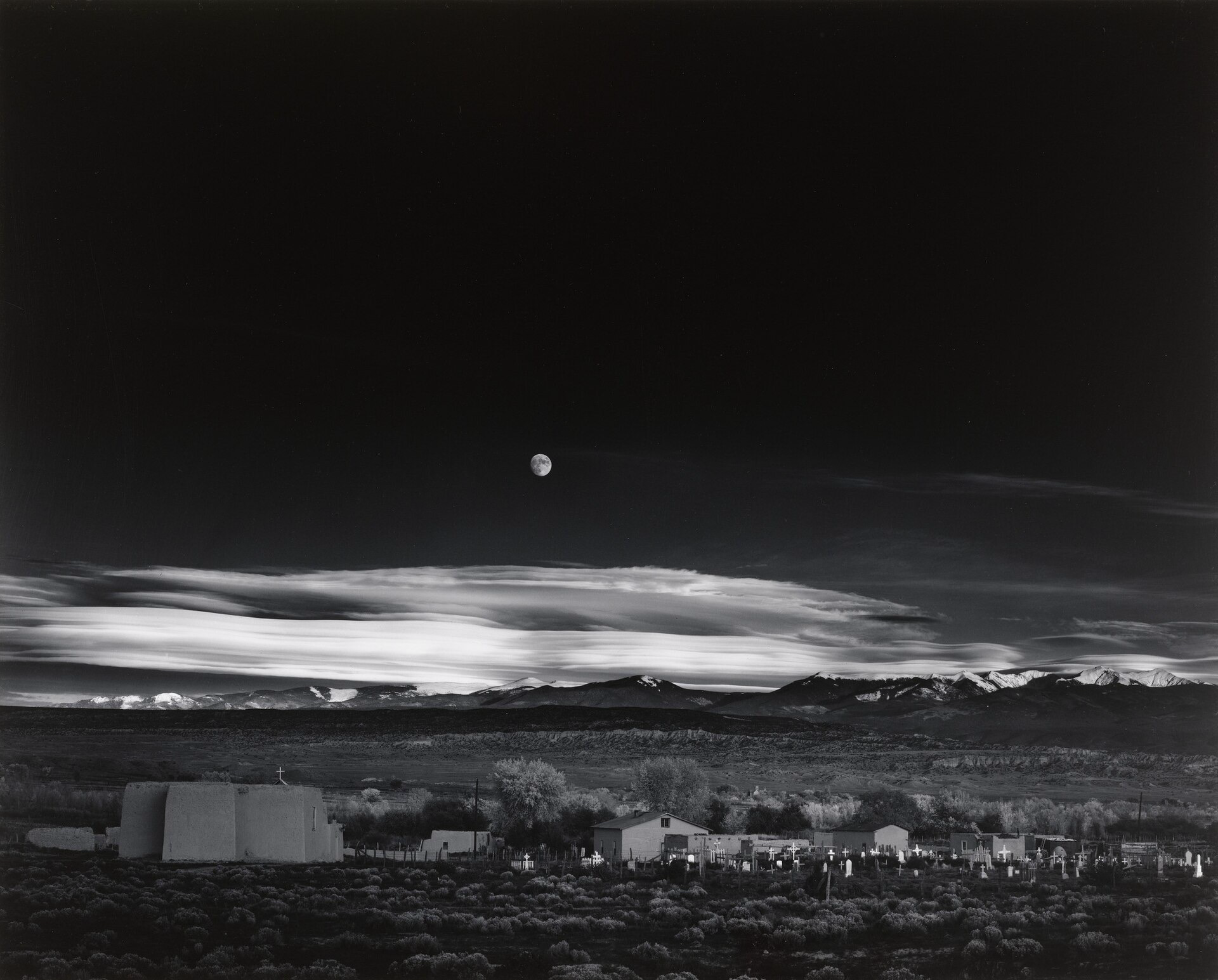

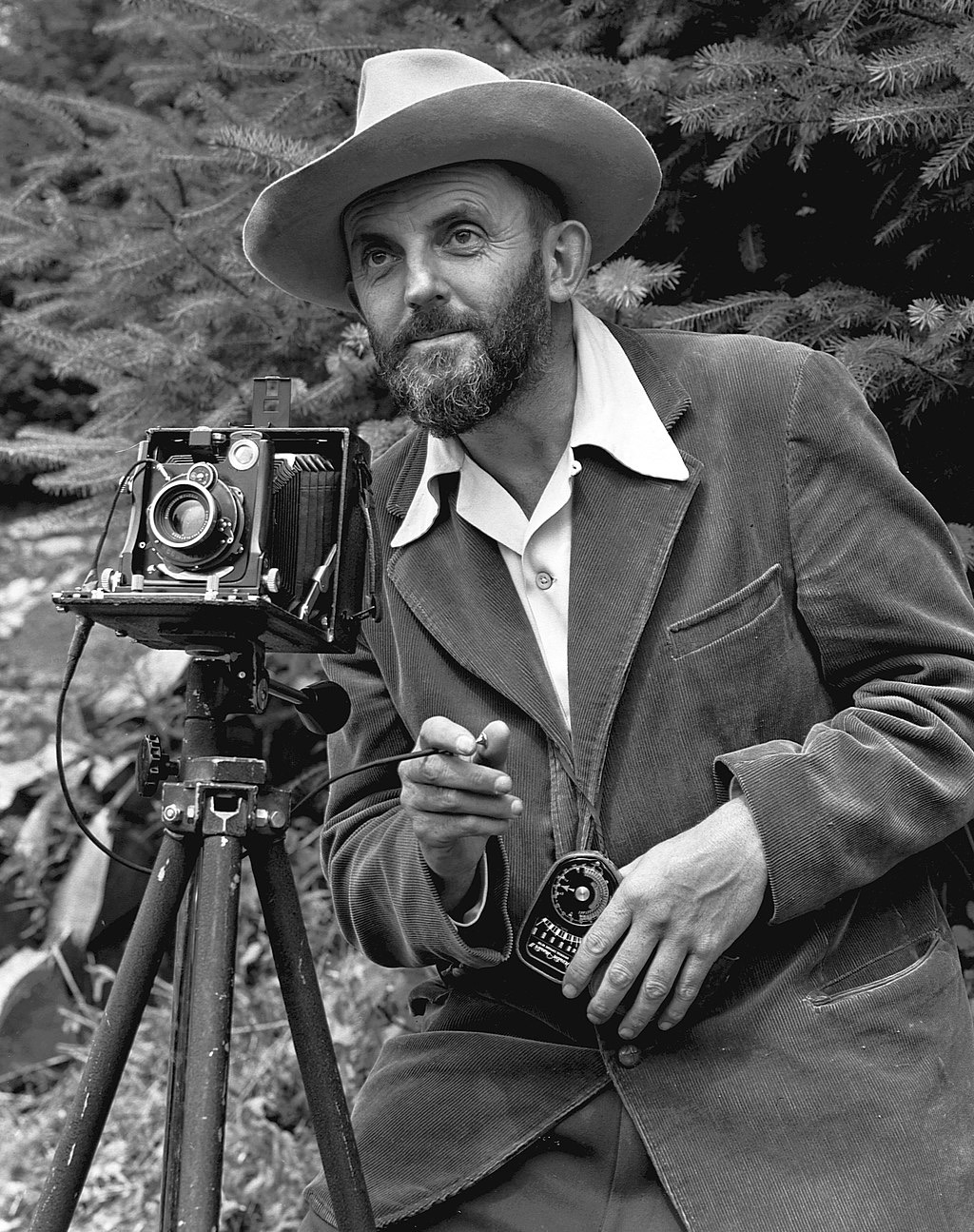

As I have pointed out in a previous article, Ansel Adams’ Moonrise, Hernandez, New Mexico did not look like this depiction at the time of capture, or at the time of its first printing. In my opinion, Ansel would have embraced the changes in photographic and imaging technology.

And, speaking of technology and computer software, the last few decades have given photographers other options (and temptations) to further “manipulate,” “enhance,” or “correct” the final image. Again, this in itself is neither good nor bad.

Almost since their inception, tools like Photoshop have enabled technicians, artists, designers and photographers to alter the reality of a photograph: to remove a distracting object from a scene (a piece of litter, for example) or to lighten or darken part of an image. For the most part, photographers accepted these capabilities as ways to improve the final image or to better communicate the story behind the photo. We likely thought very little about the impact of these features, other than that they made our jobs and lives easier.

Enter Generative AI. By many accounts, Generative AI has become one of the most quickly adopted technologies in human history.

Artificial intelligence in general, and Generative AI in particular, is very powerful, but it is no different in one respect: it, too, is a tool. It is another feature to help creatives and photographers better communicate their vision. It can remove or reduce the drudge work a photographer would otherwise face in a typical image-processing workflow, freeing them to focus more on the message of the image itself.

Whether we are casual or professional photographers, we've all been there: on location, waiting for distractions (like people or vehicles) to leave the frame, or wishing we could physically move something like a garbage can.

As photographers, we can and should learn about these tools and what they can do and then - so informed - choose to embrace or avoid them.

Using Generative Remove in Lightroom to eliminate distracting tourists in the image speeds up the process and saves me time on site - critical if the lighting or weather is paramount to my image.

Deepfakes Before AI

Can these tools cause problems? Can there be overreach in how they are used? Yes, of course; we’ve already seen this in the form of “deepfakes” and editorial images depicting scenes of conflict that never really happened. We saw it well before the rise of Gen AI, too: decades ago, in 1982, National Geographic altered the position of the pyramids of Giza to better fit the aspect ratio of its magazine. Digging deeper, we learn that the photographer paid the men on camelback to ride back and forth in the scene until he got the shot he wanted (https://medium.com/engl462/visual-deceptions-national-geographic-and-the-pyramids-of-giza-3fee6d448d0D).

It all falls under the umbrella of human intent. Is the intent to enrich and engage a viewer’s experience, or is it to deceive, to falsify, to polarize viewpoints disproportionately? These are not decisions Generative AI makes for us. These are decisions we, as humans and as the final arbiters of the image, must make. It's also why it is critically important that we remain at the center of AI-generated content, on both the creative, production side and the spectator side.

High Anxiety not Required

The anxiety and angst that many artists feel stems from the concern that their work is being used to train these AI models without permission or compensation. This is a valid concern for some, if not many, of the AI models out there. But I will climb on my Adobe soapbox at this point and proudly state that Adobe Firefly's family of models:

A) does not train on customer content

B) does not scour the Internet for training material

C) trains only on content that is in the public domain, is free of copyright, or that we have permission to train on (in this case, Adobe Stock's 300+ million assets)

D) Adobe Stock contributors are compensated if their work is used to train the Firefly models. Adobe has even sent out calls for content to its contributor base for specific types of imagery to help train Firefly and make it better. If your work is selected, you get paid.

More info: https://helpx.adobe.com/ca/firefly/get-set-up/learn-the-basics/adobe-firefly-faq.html


Make me the new Ansel Adams, please!

Another concern is that AI models will generate content in the style of specific artists. As with other trademarked, proprietary content, Firefly either doesn't understand that part of the prompt, or those words are blocked or filtered out of the resulting image.

Firefly was designed to produce content that is commercially safe to use. If the models produced content based on the work of specific artists, that content would not be commercially usable.

For example, when I use the following prompt in Firefly, "black and white landscape image in the style of Ansel Adams," I do indeed get black and white landscape images, but I also get an error message:

When you use language that Firefly deems inappropriate or cannot understand, you will usually still get a generated result, but not necessarily one that fully matches your prompt. In this case, the proper name "Ansel Adams" was filtered out before the results were generated.

Other models (though not all) are perhaps not as strict about this, but I believe that, with companies like Adobe taking the "high road," more companies will fall in line.

Seeing is Believing...?

Regarding transparency and image provenance, Adobe is leading the way by spearheading the Content Authenticity Initiative (CAI) and the creation of Content Credentials.

The Content Authenticity Initiative focuses on cross-industry participation, with an open, extensible approach to providing media transparency that allows for better evaluation of content.

Content Credentials are industry-standard, tamper-evident metadata that can help add context about how a file was created or edited and who was involved in the process. Content Credentials can be customized to include all kinds of information, but within Adobe Firefly, they are used to promote transparency with generative AI.

As part of its commitment to supporting transparency around the use of generative AI, Adobe automatically applies Content Credentials to assets where 100% of the pixels are generated with Adobe Firefly, such as Text to Image.


Economic Disruption

A third concern is a loss of income in certain creative professions, photographers included, due to the influx of AI-generated content on stock photo services (like Adobe Stock) and a possible reduction in custom commercial photography projects.

There will certainly be disruption; we're already seeing it in the number of Gen AI images available on traditional stock photo sites. However, I think this will also spur demand for authentic, non-generative content. The authentic image will always require the significant involvement of human creativity, be it a captured image or an artistically assembled composite.

In his LinkedIn article on how Generative AI is revolutionizing traditional photography, Executive Creative Director Seth Silver has this to say:

"... it's evident that Generative AI will continue to influence the field of photography, blurring the lines between traditional and digital art forms. As AI technology advances, the collaboration between human creativity and machine learning will likely produce even more innovative and surprising results. However, the essence of photography—capturing moments, telling stories, and expressing emotions—will remain at its core, with Generative AI serving as a powerful tool to enhance and expand the photographer's vision."

In her recent article for Imagen, 5 Ways AI Will Affect the Future of Photography Editing and Culling, photographer Penelope Diamantopoulous writes:

"With the rise of AI in photography and editing, photographers must adapt to new roles and acquire new skills to thrive in the industry. Embracing AI editing tools is crucial for staying current and efficient in a rapidly changing landscape. Photographers need to familiarize themselves with AI tools, collaborate with AI systems, and enhance their data analysis and interpretation skills."

There will be change, but there will also be opportunity. It's another reason why I think it is critical that working photographers understand AI tools and keep up with what they can - and can't - do. Those photographers who choose to work with AI to enhance their work or streamline workflows will succeed. Adaptability is key.

Personal note: I recall a science fiction novel I read recently in which there were two tiers of food: generated, vat-grown protein and the "real thing." Everyone had access to the artificially created (and considerably more humanely grown) meat, while those willing to pay, or to treat themselves on occasion, could buy traditional, organically raised meat.

What Does it All Mean?

This story began with how humans have manipulated the captured image since, well, the first captured image: human-made choices based on the technology available to the photographer at the time.

I would argue that we are still in that same proverbial boat; as photographers, we continue to make choices about the final image, both in-camera and in processing. The difference is that now we have technology that can interactively assist us in creating our vision.

Our Vision. Not a computer model's vision.

I've said this before and I will say it again; humans consciously create out of a need, a desire to express, to share, to communicate, to inform. We can do it on a whim, or with much research and deliberation.

Generative AI creates content when prompted; it doesn't do so on its own because it's bored or has an idea. It does what it does based on our request. Even if the result is unexpected, AI is creating suggestions based on our input. We decide whether we use it. We decide whether the result is acceptable, requires tweaking, or is only part of a larger visual concept. And if we are not happy with the result, we change the instructions to this silicon assistant. We get more specific, or provide examples of what we mean, so that it can assist us in the image creation or concepting process.

Just Say No ... or Yes

We can also choose NOT to use this technology. I could decide tomorrow not to use AI features in my camera, like subject detection for focus or digital neutral density (ND) filters. Could I still create the image I want? Yes, but it would likely take more time as I search for and attach physical filters, or result in fewer "keeper" photographs of a bird in flight. These AI features assist me in getting the images I want. They don't make the image for me.

The AI in Photoshop or Lightroom doesn't remove distractions from an image without our committing to that action. It could be wrong: it could miss something, or include the wrong thing.

We decide to take the assistant's advice, or not.

Our mind's eye is perhaps more relevant and necessary than ever before. Now we just have help at hand if we want it.